NodeSLAM

Neural Object Descriptors for Multi-View Shape Reconstruction

The choice of scene representation is crucial both for the shape inference algorithms it requires and for the smart applications it enables. We present efficient and optimisable multi-class learned object descriptors together with a novel probabilistic and differentiable rendering engine for principled full object shape inference from one or more RGB-D images. Our framework allows for accurate and robust 3D object reconstruction, which enables multiple applications including robot grasping and placing, augmented reality, and the first object-level SLAM system capable of optimising object poses and shapes jointly with the camera trajectory.

Overview Video

Method

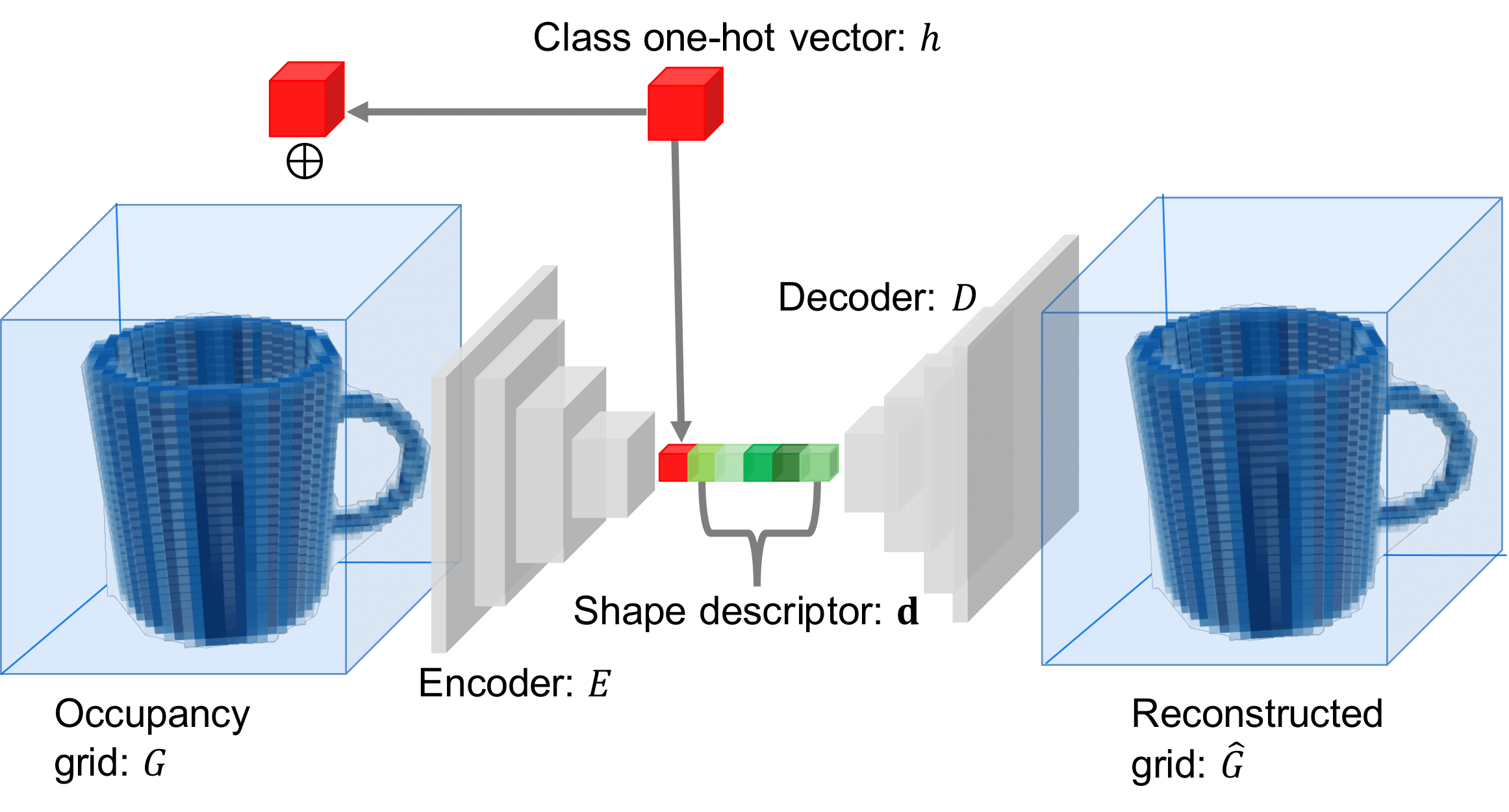

Aligned objects of the same semantic class exhibit strong regularities in shape. We leverage this to construct a class-specific, smooth latent space that lets us represent the shape of an object with a small number of parameters. We do this by training a single class-conditional variational autoencoder (VAE) network.
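To make the idea of a class-conditioned latent space concrete, here is a minimal Python sketch of the ingredients a conditional VAE relies on: the reparameterisation trick, the KL term that keeps the latent space smooth, and conditioning the decoder on a one-hot class label. It is not the NodeSLAM network. All function names are hypothetical, and `decode_occupancy` is a single linear layer standing in, by assumption, for a real deep decoder that outputs a voxel occupancy grid.

```python
import math
import random


def one_hot(class_id, num_classes):
    """Encode the semantic class as a one-hot vector."""
    return [1.0 if i == class_id else 0.0 for i in range(num_classes)]


def reparameterise(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1).

    Writing the sample this way keeps it differentiable with respect to mu and log_var.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions.

    This term pulls codes towards a unit Gaussian, which keeps the latent space smooth.
    """
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var))


def decoder_input(z, class_id, num_classes):
    """Condition the decoder by concatenating the latent code with the class label."""
    return list(z) + one_hot(class_id, num_classes)


def decode_occupancy(z, class_id, num_classes, weights, biases):
    """Hypothetical one-layer decoder: one occupancy probability per voxel.

    `weights` has one row per voxel and len(z) + num_classes columns.
    """
    x = decoder_input(z, class_id, num_classes)
    logits = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [1.0 / (1.0 + math.exp(-logit)) for logit in logits]


# Usage: a 2-D latent code for class 1 of 3, decoded into 4 voxels with untrained weights.
rng = random.Random(0)
z = reparameterise([0.2, -0.1], [-2.0, -2.0], rng)
occupancy = decode_occupancy(z, 1, 3, [[0.0] * 5 for _ in range(4)], [0.0] * 4)
```

Because the class label is an extra decoder input rather than a separate network, one set of weights can serve every class, which matches the "single network" described above.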

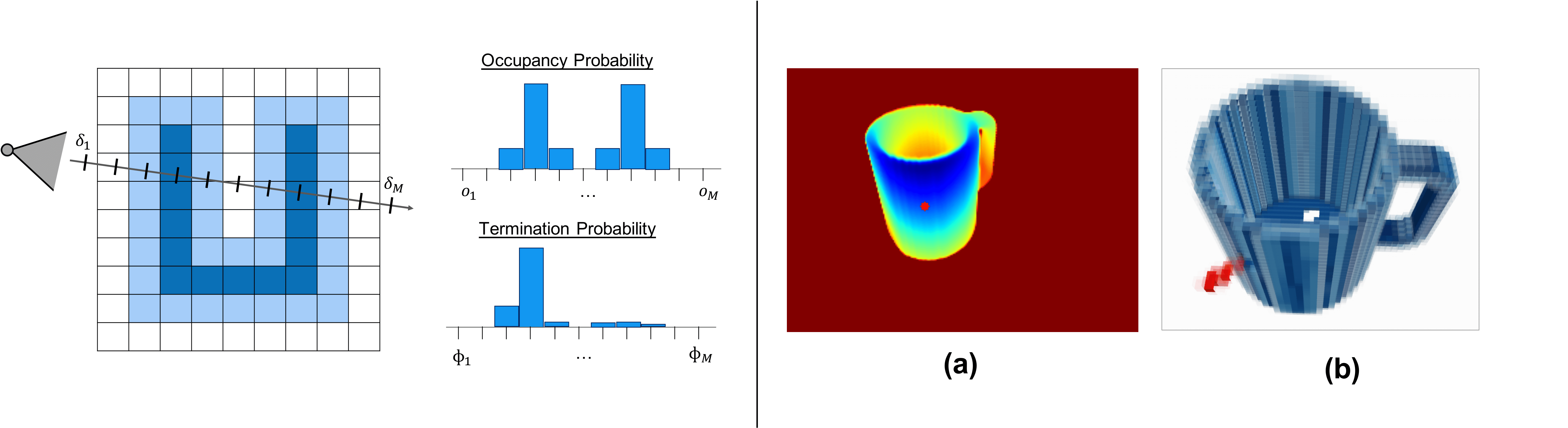

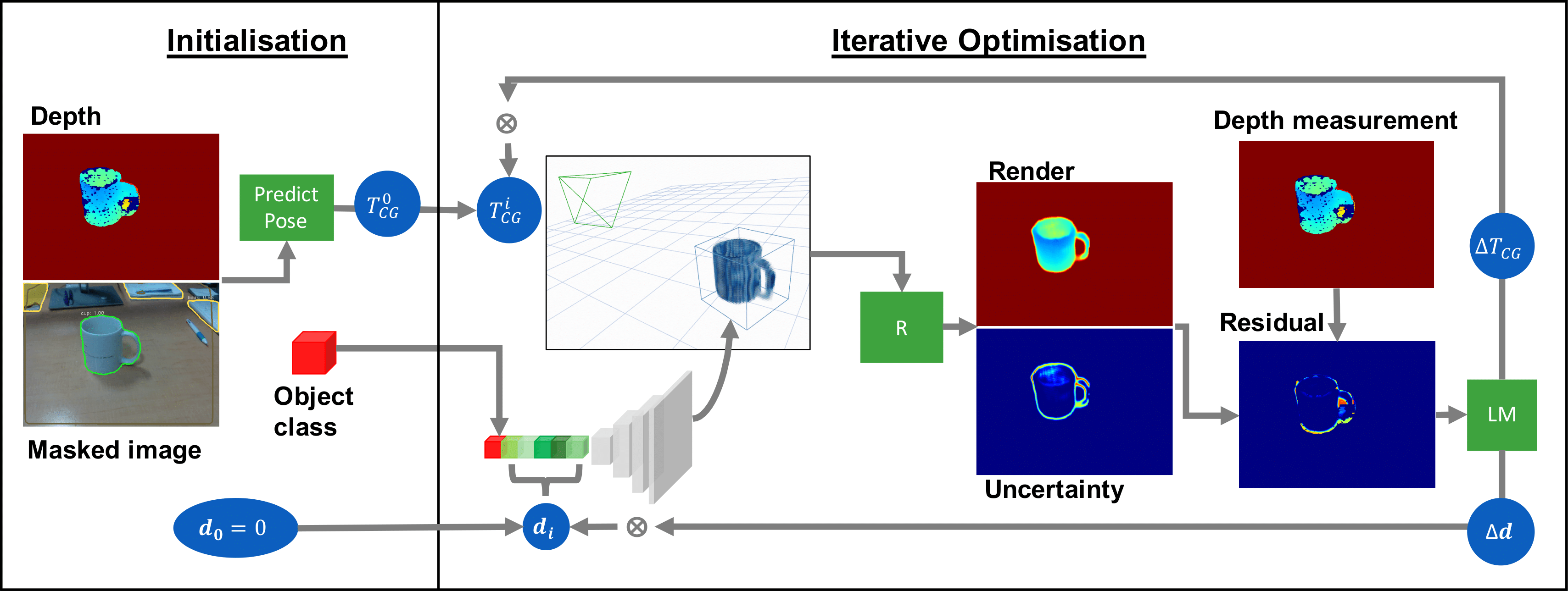

To use a generative model for inference, we need a measurement function. In our case, the measurements are depth images with object masks. We introduce a novel probabilistic and differentiable rendering engine that transforms object volumes into depth images with associated uncertainty.
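One way to turn an occupancy volume into a depth value with uncertainty is to walk along each camera ray and compute the probability that the ray terminates at each sample, i.e. that the sample is the first occupied one. The sketch below assumes occupancy probabilities have already been sampled at increasing depths along a single ray, and that a ray passing every sample escapes to a fixed `far_depth`. The function name `render_ray` and this escape convention are assumptions for illustration, not taken from the NodeSLAM code.

```python
def render_ray(occupancies, depths, far_depth):
    """Expected depth and variance along one ray through an occupancy volume.

    occupancies[i] is the probability that the sample at depths[i] is occupied;
    samples are ordered from near to far. A ray that passes every sample is
    assumed (hypothetical convention) to terminate at far_depth.
    """
    term_probs = []
    free = 1.0  # probability that every earlier sample was empty
    for o in occupancies:
        term_probs.append(o * free)  # probability that this is the first occupied sample
        free *= 1.0 - o
    term_probs.append(free)  # the ray escapes the object volume
    ds = list(depths) + [far_depth]
    mean = sum(p * d for p, d in zip(term_probs, ds))
    var = sum(p * (d - mean) ** 2 for p, d in zip(term_probs, ds))
    return mean, var, term_probs


# Usage: a fuzzy surface around depth 1.5 seen along a single ray.
mean, var, probs = render_ray([0.1, 0.6, 0.9], [1.0, 1.5, 2.0], far_depth=5.0)
```

The termination probabilities always sum to one, so the rendered depth is a proper expectation and the variance measures how spread out the surface is along the ray. Because the mean and variance are built only from sums and products, they are differentiable with respect to every occupancy value.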

This allows us to optimise an object shape, represented by a compact code, against one or more depth images to obtain a full object shape reconstruction. Combining the object VAE with the probabilistic rendering engine lets us tackle the important question of how to integrate several measurements for shape inference using semantic priors. The single-object optimisation pipeline is shown next.

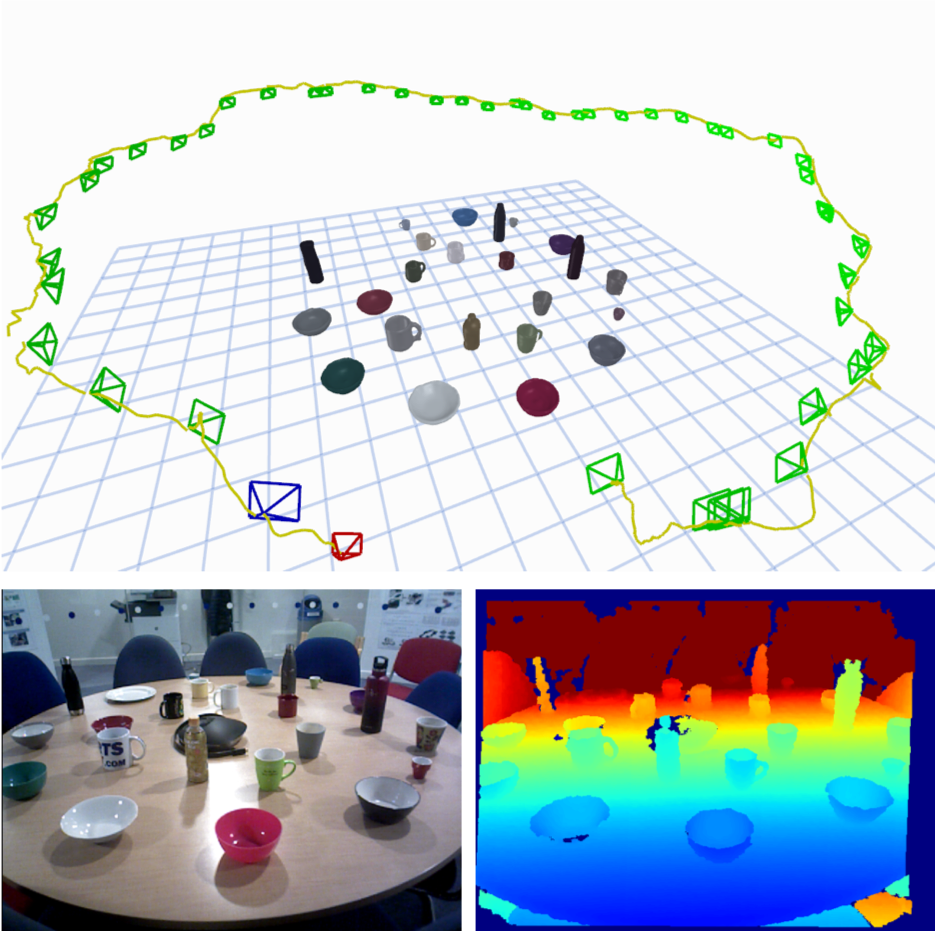

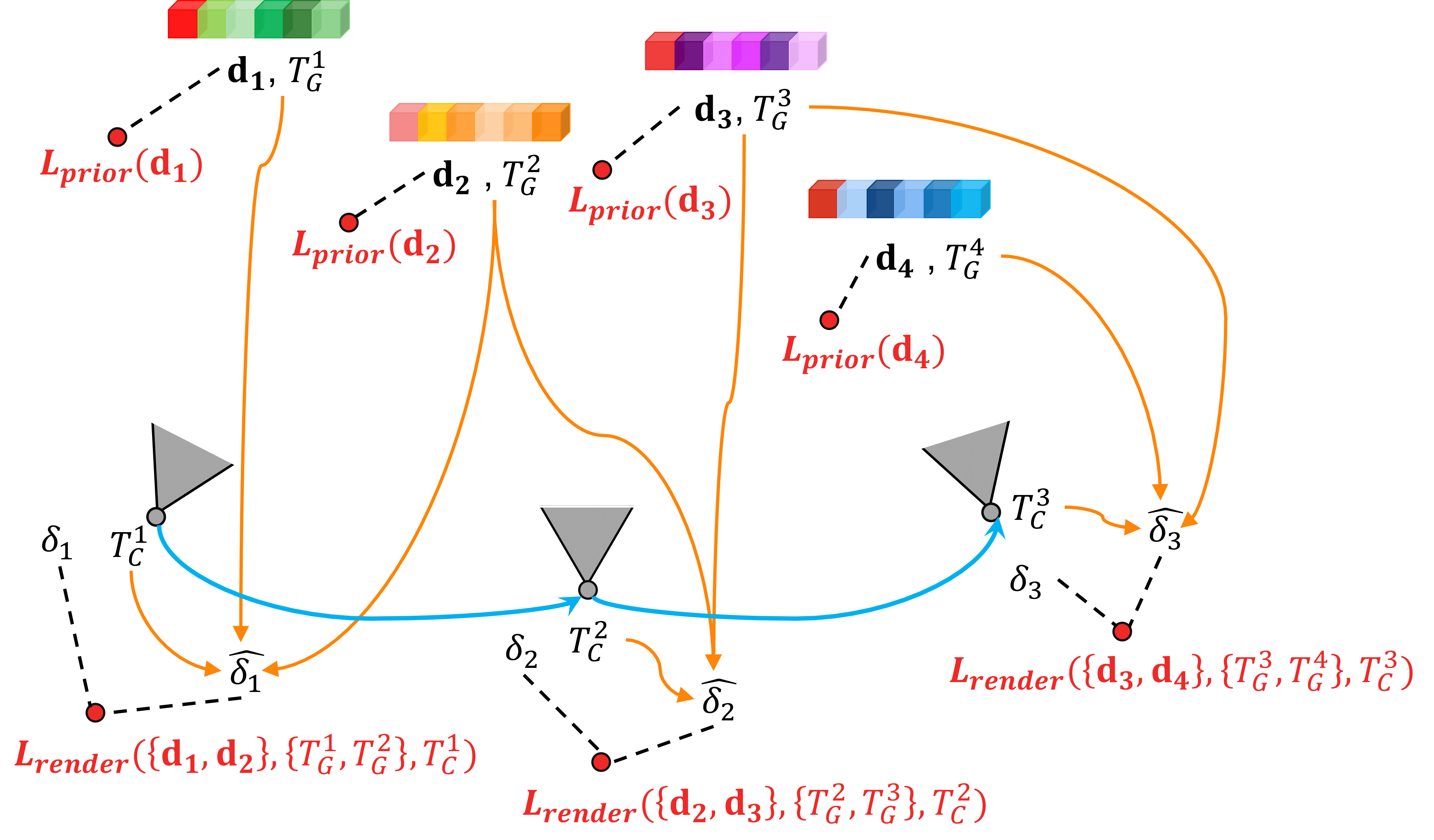
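Shape inference then reduces to minimising a measurement cost over the latent code. The following minimal sketch assumes a cost made of squared depth errors weighted by the rendered variance, summed over all pixels and views, plus a Gaussian prior term on the code. `make_linear_view` is a hypothetical toy renderer, and finite differences stand in for the automatic differentiation a real system would use.

```python
def make_linear_view(offsets, variance):
    """Hypothetical toy renderer: pixel k has depth offsets[k] + z[k % len(z)].

    Returns a function mapping a latent code to a list of (depth, variance) pairs,
    standing in for a real differentiable, probabilistic renderer.
    """
    def render(z):
        return [(off + z[k % len(z)], variance) for k, off in enumerate(offsets)]
    return render


def shape_loss(z, views, prior_weight):
    """Uncertainty-weighted depth error over all views plus a Gaussian prior on z."""
    total = prior_weight * sum(zi * zi for zi in z)
    for render, observed in views:
        for (depth, var), d_obs in zip(render(z), observed):
            total += (d_obs - depth) ** 2 / var
    return total


def numerical_grad(f, z, eps=1e-5):
    """Central finite differences; a real system would use automatic differentiation."""
    grad = []
    for i in range(len(z)):
        z_plus, z_minus = list(z), list(z)
        z_plus[i] += eps
        z_minus[i] -= eps
        grad.append((f(z_plus) - f(z_minus)) / (2.0 * eps))
    return grad


def optimise_code(views, dim, steps=200, lr=0.05, prior_weight=0.01):
    """Gradient descent on the latent code, starting from the zero (prior mean) code."""
    z = [0.0] * dim
    for _ in range(steps):
        grad = numerical_grad(lambda v: shape_loss(v, views, prior_weight), z)
        z = [zi - lr * gi for zi, gi in zip(z, grad)]
    return z


# Usage: two views of the same object, each observing two pixels.
true_z = [0.3, -0.2]
view_a = make_linear_view([1.0, 1.0], variance=1.0)
view_b = make_linear_view([2.0, 2.5], variance=1.0)
views = [(r, [d for d, _ in r(true_z)]) for r in (view_a, view_b)]
z_hat = optimise_code(views, dim=2)
```

Adding a view simply adds more terms to the same cost, which is one simple way of integrating several measurements; the prior term keeps the code close to the learned shape space when observations are sparse, at the price of a slight pull towards the mean shape.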

When it comes to building models of scenes with many objects and from multiple observations, our optimisable compact object models can serve as the landmarks in an object-based SLAM map. We build the first jointly optimisable object-level SLAM system, which uses the same measurement function both for camera tracking and for the joint optimisation of object poses, object shapes and the camera trajectory. The accompanying figure shows a graph of shape descriptors and camera poses that is jointly optimised in a sliding-window manner.
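The sliding-window idea can be illustrated with a deliberately reduced 1-D model in which cameras and objects are scalars and each keyframe measures an object's offset from the camera. In this sketch, which is not the NodeSLAM back end, the scalar object value is a hypothetical stand-in for a full object pose and shape code, the oldest camera in the window is held fixed to remove the gauge freedom, and plain gradient descent replaces whatever solver a real system would use. The class name `SlidingWindowMap` is likewise illustrative.

```python
from collections import deque


class SlidingWindowMap:
    """Toy 1-D joint optimiser over a sliding window of keyframes.

    Cameras and objects are scalars; a keyframe measures object - camera.
    The scalar object value is a stand-in for a full object pose and shape code.
    """

    def __init__(self, window_size):
        # Each entry is [camera_estimate, {object_id: measured_offset}].
        self.window = deque(maxlen=window_size)
        self.objects = {}  # object_id -> current estimate

    def add_keyframe(self, camera_guess, measurements):
        # Initialise newly seen objects from their first observation.
        for obj_id, offset in measurements.items():
            self.objects.setdefault(obj_id, camera_guess + offset)
        # Appending to a full deque drops the oldest keyframe from the window.
        self.window.append([camera_guess, dict(measurements)])

    def optimise(self, iters=500, lr=0.1):
        """Jointly refine window cameras and observed objects by gradient descent."""
        for _ in range(iters):
            cam_grads = [0.0] * len(self.window)
            obj_grads = {obj_id: 0.0 for obj_id in self.objects}
            for i, (cam, meas) in enumerate(self.window):
                for obj_id, offset in meas.items():
                    residual = (self.objects[obj_id] - cam) - offset
                    obj_grads[obj_id] += 2.0 * residual
                    cam_grads[i] -= 2.0 * residual
            # Hold the oldest camera in the window fixed to remove the gauge freedom.
            for i in range(1, len(self.window)):
                self.window[i][0] -= lr * cam_grads[i]
            # Objects not observed in the window get zero gradient and stay fixed.
            for obj_id in self.objects:
                self.objects[obj_id] -= lr * obj_grads[obj_id]


# Usage: the true cameras are at 0, 1, 2 and objects "a", "b" at 5 and 3.
slam = SlidingWindowMap(window_size=3)
slam.add_keyframe(0.0, {"a": 5.0, "b": 3.0})
slam.add_keyframe(1.3, {"a": 4.0, "b": 2.0})  # noisy camera guess
slam.add_keyframe(1.6, {"a": 3.0})            # noisy camera guess
slam.optimise()
```

Cameras and objects are updated together from the same residuals, so a better object estimate immediately improves the camera estimates and vice versa; bounding the window keeps the cost of each optimisation roughly constant as the trajectory grows.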

Shape Optimisation

Object-Level SLAM

Desk Sequence

Circular Table Sequence

Robotic Manipulation

Object Packing

Object Sorting

Additional Demonstrations

Few-Shot Augmented Reality

Water Pouring

Bibtex

@inproceedings{Sucar:etal:3DV2020,
  title={{NodeSLAM}: Neural Object Descriptors for Multi-View Shape Reconstruction},
  author={Edgar Sucar and Kentaro Wada and Andrew Davison},
  booktitle={Proceedings of the International Conference on 3D Vision ({3DV})},
  year={2020},
}

Contact

If you have any questions, please feel free to contact Edgar Sucar.